Welcome! eePRO is the global online community for everyone who cares about education and creating a more just and sustainable future. Connect with other EE professionals, participate in discussions in eePRO groups, and find and share resources, events, and opportunities!

What's New on eePRO!

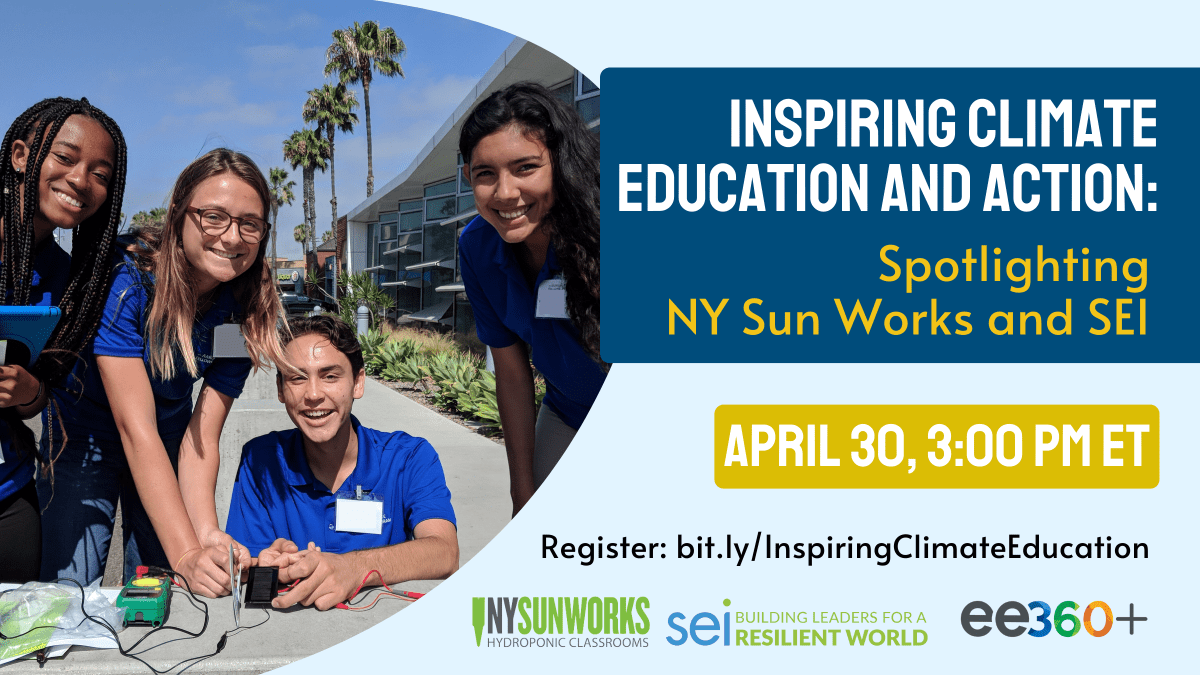

Image

Inspiring Climate Education and Action: Spotlighting NY Sun Works and SEI

April 30, 2024, at 3:00 PM ET

Join us for an inspiring session to highlight the work of two game-changing organizations that are building leadership for the future and advancing climate change education and climate solutions.

Let's Get Outside For 5

When students go outdoors, they become more confident, attentive, creative, and cooperative. #OutsideFor5 is a pledge campaign that encourages educators to incorporate outdoor activity for at least 5 minutes a day, 5 days a week.

Take Action for EE Today!

Action Alert

Calling all educators and advocates! Ask your legislators to support funding for environmental education.

Latest News!

Check out eeJOBS: The leading jobs listing service for the environmental education field

Search for a job. Post a job. Explore careers. Subscribe.

New GEEP E-Book Chapter: The Global Evolution of Ocean Literacy

From Ssese Islands to Global Stages: My Safari in Environmental Advocacy and the "TEEN-2024" Project

2023 Changemaker Grantees

Stay Up-To-Date with eeNEWS & eeJOBS!

Sign up for eeNEWS, NAAEE's biweekly newsletter promoting EE events, announcements, grants, and resources. Become an NAAEE member to receive additional exclusive, advanced content.

Subscribe to eeJOBS, NAAEE's weekly newsletter listing new jobs added the previous week.

Contact Us!

We want to hear from you! If you have suggestions, concerns, or other inquiries, contact us at eePRO@naaee.org.